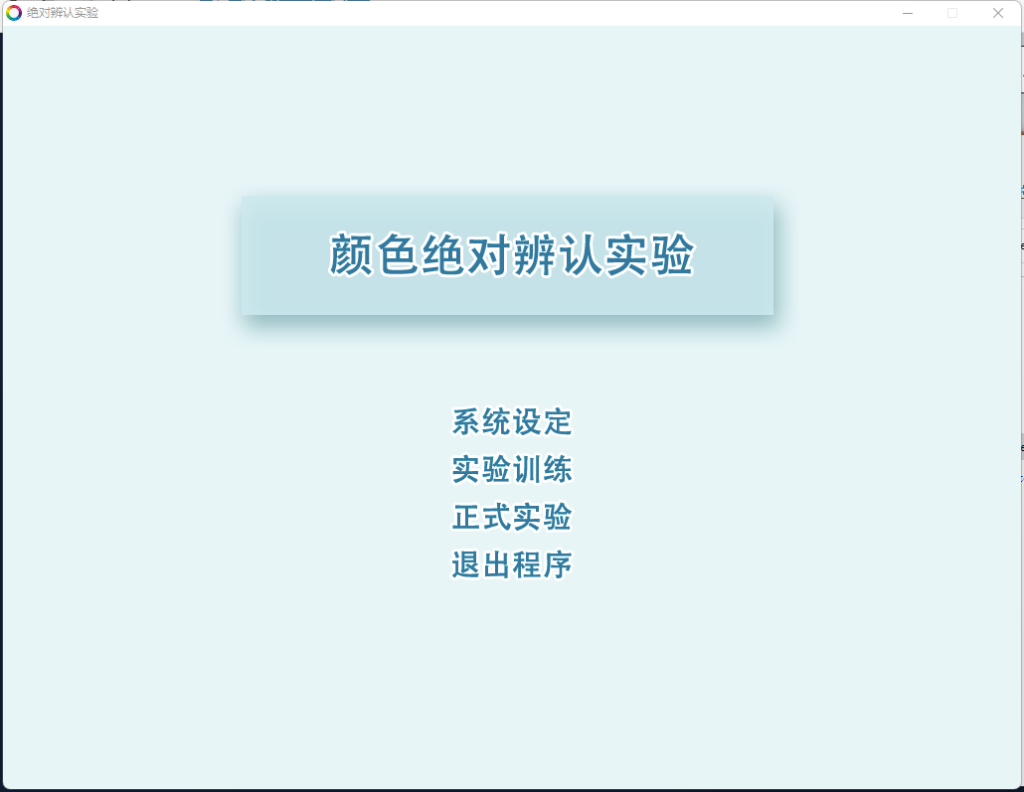

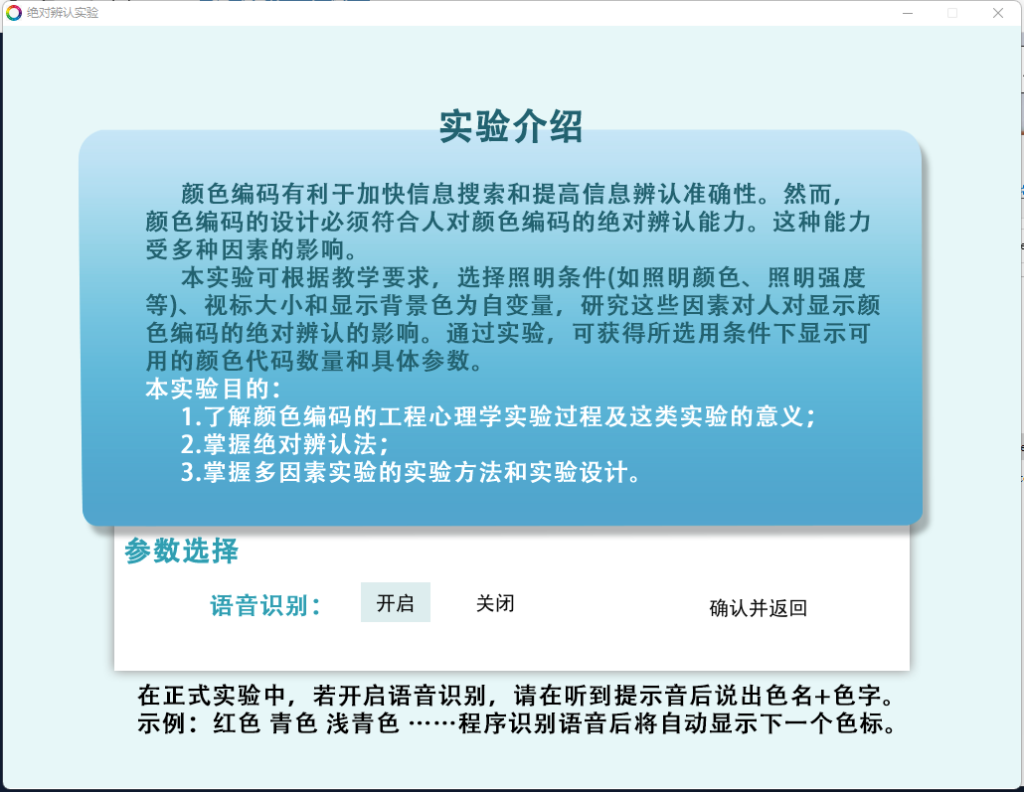

A challenging human-factors programming project built for Prof. Gao, Department of Psychology and Behavioral Sciences, Zhejiang University. It will be published in a textbook he is currently writing. The code is written in Python.

The program is designed to investigate how color block size and lighting conditions affect human color perception. An offline speech recognition algorithm is needed to capture and analyze the user's voice input. I evaluated and selected PocketSphinx, a Python package from Carnegie Mellon University based on hidden Markov models, and fine-tuned it on several task-related words to support Chinese speech recognition. I also designed the program's user interface.
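The write-up does not show how PocketSphinx was adapted to the task words. As one possible approach, not necessarily the one used in this project, the `speech_recognition` package (imported as `speech` in the excerpt below) accepts a `keyword_entries` argument on `recognize_sphinx`: a list of `(phrase, sensitivity)` pairs, with sensitivity between 0 and 1, that makes the decoder listen only for those phrases. In this sketch the word list `TASK_WORDS`, the sensitivity of 0.8 and both helper names are hypothetical, and every keyword is assumed to exist in the zh-CN model's pronunciation dictionary.

```python
# Hypothetical task vocabulary; the project's actual word list is not shown.
TASK_WORDS = ("红", "绿", "蓝", "黄")


def build_keyword_entries(words, sensitivity=0.8):
    """Pair each task word with a detection sensitivity in [0, 1] (hypothetical helper)."""
    if not 0.0 <= sensitivity <= 1.0:
        raise ValueError("sensitivity must be between 0 and 1")
    return [(word, sensitivity) for word in words]


def recognize_task_word(wav_path, words=TASK_WORDS):
    """Decode a WAV file offline, spotting only the given words (hypothetical helper)."""
    # Imported here so build_keyword_entries() can be used without the package installed.
    import speech_recognition as speech

    recognizer = speech.Recognizer()
    with speech.AudioFile(wav_path) as source:
        audio = recognizer.record(source)
    # Raises speech.UnknownValueError when none of the keywords is detected.
    return recognizer.recognize_sphinx(
        audio, language="zh-CN", keyword_entries=build_keyword_entries(words)
    )
```

Because keyword spotting ignores speech outside the list, an off-task answer tends to surface as `UnknownValueError` rather than as a misrecognized word, which is often easier to handle in an experiment.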

Because the program is partly confidential, only a few parts of the code are shown here.

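```python
import pygame

# `win` (the display surface) and `text_objects()` (returns a rendered text
# Surface and its Rect) are defined elsewhere in the full program.


# Define a functional button. Buttons sharing a set number act like a radio
# group: only the most recently pressed button in the set keeps the pressed color.
class Button:
    buttonsets = {}       # set number -> number of buttons created in that set
    dict_active_tag = {}  # set number -> tag of the last pressed button in that set

    def __init__(self, setnum):
        self.mouse = None
        self.click = (0, 0, 0)
        self.pressed = False
        self.buttonsetnum = setnum
        # Give this button a 1-based index within its set.
        Button.buttonsets[setnum] = Button.buttonsets.get(setnum, 0) + 1
        self.buttoninsettag = Button.buttonsets[setnum]

    def active(self, msg, signal, x, y, w, h, ic, ac, pc=(255, 255, 255), action=None, *args):
        # msg: button text
        # signal: whether to draw the button rectangle
        # x, y: position; w, h: size
        # ic, ac, pc: inactive / active (hovered) / pressed color
        # action: function to call when the button is clicked
        # args: arguments passed to action
        self.mouse = pygame.mouse.get_pos()
        self.click = pygame.mouse.get_pressed()

        if x < self.mouse[0] < x + w and y < self.mouse[1] < y + h:
            # The mouse is over the button.
            self.draw(ac, signal, x, y, w, h)
            if self.click[0] and action is not None:
                action(*args)
                self.pressed = True
                # Mark this button as the active one in its set.
                Button.dict_active_tag[self.buttonsetnum] = self.buttoninsettag
        elif self.pressed and Button.dict_active_tag[self.buttonsetnum] == self.buttoninsettag:
            # Still the most recently pressed button in its set.
            self.draw(pc, signal, x, y, w, h)
        elif self.pressed:
            # Another button in the same set was pressed since; reset this one.
            self.draw(ic, signal, x, y, w, h)
            self.pressed = False
        else:
            self.draw(ic, signal, x, y, w, h)

        # Draw the label centered on the button (SimHei can render Chinese text).
        smallText = pygame.font.SysFont('simhei', 20)
        textSurf, textRect = text_objects(msg, smallText, (10, 10, 10))
        textRect.center = (x + w / 2, y + h / 2)
        win.blit(textSurf, textRect)

    def draw(self, c, signal, x, y, w, h):
        if signal:
            pygame.draw.rect(win, c, (x, y, w, h))
```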
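The radio-group bookkeeping inside `Button` is easier to follow once it is separated from pygame. The sketch below uses a hypothetical `ButtonState` class that keeps only the counters from the excerpt: each button gets a 1-based tag within its set, pressing a button records it as the set's active tag, and a previously pressed button falls back to the inactive color once another button in the same set has been pressed. Mouse handling and drawing are deliberately left out.

```python
class ButtonState:
    """Hypothetical, pygame-free model of Button's set/tag bookkeeping."""

    buttonsets = {}       # set number -> buttons created so far in that set
    dict_active_tag = {}  # set number -> tag of the most recently pressed button

    def __init__(self, setnum):
        self.buttonsetnum = setnum
        ButtonState.buttonsets[setnum] = ButtonState.buttonsets.get(setnum, 0) + 1
        self.buttoninsettag = ButtonState.buttonsets[setnum]
        self.pressed = False

    def press(self):
        """Simulate a click inside the button (the action call is omitted)."""
        self.pressed = True
        ButtonState.dict_active_tag[self.buttonsetnum] = self.buttoninsettag

    def color(self, ic, pc):
        """Color chosen when the mouse is NOT over the button, mirroring the elif chain."""
        if self.pressed and ButtonState.dict_active_tag[self.buttonsetnum] == self.buttoninsettag:
            return pc
        if self.pressed:
            # Another button in this set became active, so clear the pressed flag.
            self.pressed = False
        return ic
```

Keeping the state in class-level dictionaries means buttons need no reference to each other; the trade-off is that the counters are global to the process, so recreating buttons every frame would keep incrementing the tags.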

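```python
import os
import wave

import pyaudio
import speech_recognition as speech

# FORMAT, CHANNELS, RATE, CHUNK, wave_out_path, trail and the counter
# resultcnt are defined elsewhere in the full program.


def recognize_color_word():  # hypothetical name; the original function is not shown
    global resultcnt

    # Speech Recognition: record about 2 seconds of audio into a WAV file.
    p = pyaudio.PyAudio()
    stream = p.open(format=FORMAT,
                    channels=CHANNELS,
                    rate=RATE,
                    input=True,
                    frames_per_buffer=CHUNK)
    wf = wave.open(wave_out_path, 'wb')
    wf.setnchannels(CHANNELS)
    wf.setsampwidth(p.get_sample_size(FORMAT))
    wf.setframerate(RATE)
    # RATE / CHUNK reads make one second, so RATE / CHUNK * 2 reads make two.
    for _ in range(int(RATE / CHUNK * 2)):
        data = stream.read(CHUNK)
        wf.writeframes(data)
    stream.stop_stream()
    stream.close()
    p.terminate()
    wf.close()

    # RECOG: decode the recorded file offline with PocketSphinx.
    recognizer = speech.Recognizer()
    with speech.Microphone() as source:
        # Calibrates recognizer.energy_threshold; note that record() below
        # reads the whole file and does not apply this threshold.
        recognizer.adjust_for_ambient_noise(source)
    with speech.AudioFile(wave_out_path) as source:
        audio = recognizer.record(source)

    try:
        resultstr = recognizer.recognize_sphinx(audio, language='zh-CN')
        # PocketSphinx separates words with spaces, so a non-empty result
        # without spaces means exactly one word was recognized.
        if " " not in resultstr and resultstr != "":
            resultstr = "result is:" + resultstr
        else:
            resultstr = "Please check, more than one word: " + resultstr
    except speech.UnknownValueError:
        resultstr = "Unable to recognize"
    except speech.RequestError as e:
        resultstr = "Fatal Error; {0}".format(e)

    # Append the numbered result to a log file named after trail.size.
    with open(os.path.join('data', str(trail.size) + '.txt'), 'a', encoding='utf-8') as resultf:
        resultf.write(str(resultcnt) + resultstr + '\n')
    resultcnt += 1
    return resultstr
```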
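The post-processing at the end of the speech excerpt (accept a single recognized word, flag anything else, and append a numbered line to a per-size log file) does not depend on audio at all, so it can be factored out and checked without a microphone. The sketch below follows the same single-word rule; the function names `classify_result` and `log_result`, the message text for the flagged case, and passing the counter explicitly instead of using a global are assumptions for illustration.

```python
import os


def classify_result(hypothesis):
    """Label a PocketSphinx hypothesis using the single-word rule (hypothetical helper)."""
    # PocketSphinx separates words with spaces, so one non-empty token = one word.
    if hypothesis != "" and " " not in hypothesis:
        return "result is:" + hypothesis
    # Empty or multi-word results are flagged for the experimenter to check.
    return "Please check, more than one word: " + hypothesis


def log_result(data_dir, block_size, count, result):
    """Append '<count><result>' to <data_dir>/<block_size>.txt; return the next count."""
    path = os.path.join(data_dir, str(block_size) + ".txt")
    with open(path, "a", encoding="utf-8") as f:
        f.write(str(count) + result + "\n")
    return count + 1
```

Returning the next count keeps the trial counter in the caller's hands, which avoids the `global` declaration and makes the logging step easy to test in isolation.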